Digital Platforms

Platform Governance and Interventions to Limit Online Harms

- My postdoctoral research focuses on the economic drivers of digital trust erosion, particularly the externalities caused by fraudulent advertisements in two-sided marketplaces (e.g., social networks, e-commerce platforms).

- Motivation: Drawing on Nobel Prize-winning economic theories from Spence, Coase, and Stiglitz, Van Alstyne (2023) proposes that consumer harms from misleading claims be measured by the magnitude of the decision errors those claims induce. This quantifiable metric allows us to internalize market externalities and design interventions that achieve equilibrium between producers and consumers in information marketplaces.

- Impact: Our research has resulted in an invited talk to Google’s Monetized Policy Team and in letters of commitment to support our research from Bluesky (a decentralized social platform with 30+ million users) and the Trust and Safety Foundation (one of the leading professional research associations in trust and safety, T&S).

Building a Virtual Online Marketplace for Multi-turn Human-AI Interaction

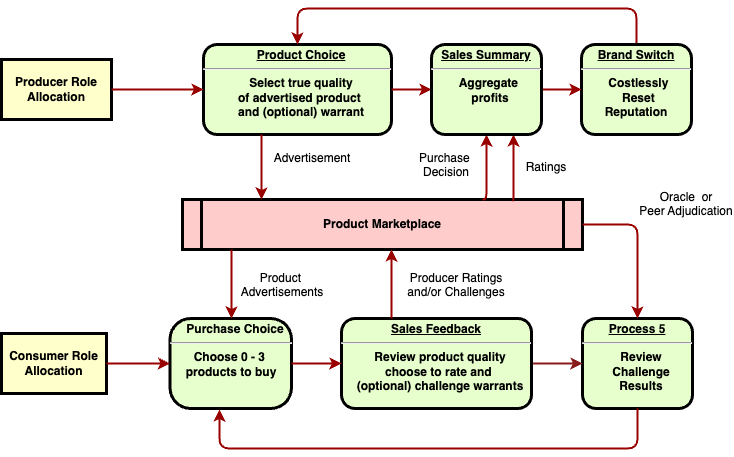

I helped my PIs set up the Platform Governance lab at BU and MIT, and developed an experimental marketplace where sellers advertise products to buyers over multiple rounds of sales. Ads may not accurately represent true product quality, which gives sellers the option to deceive buyers. We introduce an economic intervention called staking that:

- Increases profits for honest advertisers.

- Penalizes deceptive advertisers, significantly reducing their dishonest sales and profitability.
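To make the penalty side of staking concrete, here is a minimal sketch of a per-sale seller payoff. It rests on assumptions that are not taken from our experiment: the `Sale` fields, the numeric values, and the rule that the whole stake is forfeited whenever an ad overstates quality are all hypothetical. It does not model buyer behavior, so it cannot show the increase in honest sellers' profits, which would come from how buyers respond to staked ads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sale:
    """One completed sale. All fields are illustrative assumptions."""
    price: float              # what the buyer pays
    cost: float               # seller's cost of supplying the product
    advertised_quality: int   # quality claimed in the ad
    true_quality: int         # quality the buyer discovers after purchase
    stake: float = 0.0        # amount the seller puts at risk behind the ad


def seller_profit(sale: Sale) -> float:
    """Sale margin minus the stake, which is lost only if the ad overstated quality."""
    margin = sale.price - sale.cost
    overstated = sale.advertised_quality > sale.true_quality
    penalty = sale.stake if overstated else 0.0
    return margin - penalty


# Hypothetical numbers: the deceptive seller has a cheaper, lower-quality product.
honest = Sale(price=10.0, cost=6.0, advertised_quality=3, true_quality=3, stake=5.0)
deceptive = Sale(price=10.0, cost=2.0, advertised_quality=3, true_quality=1, stake=5.0)

print(seller_profit(honest))     # stake is returned, full margin kept
print(seller_profit(deceptive))  # stake is forfeited, margin shrinks
```

With a stake of zero, the deceptive seller in this toy example would keep the larger margin. A sufficiently large stake removes that advantage.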

Buyer Gameplay

Seller Gameplay

Replicable Behavioral Experiments

We have created reproducible infrastructure for running interactive online experiments at scale. The marketplace can run tens of parallel “games”, each with 5-8 advertisers and 5-8 buyers, in an e-commerce setting that captures the intricacies of online sales for both sides: sellers choose how accurately an advertisement reflects product quality, while buyers select from a number of products, spend from a limited wallet, rely on reviews, and add ratings after learning a product’s true quality post-purchase. Modeling online sales is challenging, and our framework is designed to reflect these complexities while keeping experiments easy to run. Built atop MIT’s Empirica library, it lets us launch online sales experiments at the click of a button.
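The buyer side of a single round can be sketched roughly as follows. This is an illustration only, not the Empirica-based implementation: the `Listing` and `Buyer` classes, the choice rule (best advertised quality per unit price), and the rating rule (rating equals true quality) are all hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class Listing:
    """A product on offer. Field names are assumptions for illustration."""
    seller: str
    price: float              # assumed to be strictly positive
    advertised_quality: int   # what the ad claims
    true_quality: int         # revealed to the buyer only after purchase
    ratings: list = field(default_factory=list)


@dataclass
class Buyer:
    name: str
    wallet: float             # limited budget for the game

    def choose(self, listings):
        """Pick the affordable listing with the best advertised quality per unit price."""
        affordable = [item for item in listings if item.price <= self.wallet]
        if not affordable:
            return None
        return max(affordable, key=lambda item: item.advertised_quality / item.price)

    def buy_and_rate(self, listing):
        """Pay for the product, learn its true quality, and leave that as the rating."""
        self.wallet -= listing.price
        listing.ratings.append(listing.true_quality)
        return listing.true_quality


listings = [
    Listing("A", price=5.0, advertised_quality=3, true_quality=3),   # honest ad
    Listing("B", price=4.0, advertised_quality=3, true_quality=1),   # overstated ad
    Listing("C", price=20.0, advertised_quality=5, true_quality=5),  # too expensive
]
buyer = Buyer("b1", wallet=10.0)
pick = buyer.choose(listings)
revealed = buyer.buy_and_rate(pick)
print(pick.seller, revealed, buyer.wallet, pick.ratings)
```

In this toy round the buyer is drawn to the cheaper, overstated ad and only discovers the gap after purchase. The low rating it leaves is the reputation signal available to buyers in later rounds.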

Impact on Platform Design

By redesigning platform incentives, my research provides actionable insights for policymakers and platform operators. Key findings include:

- Introducing economic costs for the production of false ads reduces their prevalence.

- Transparent mechanisms like escrow-based truth warrants enhance accountability while preserving free speech.

- Platforms benefit economically from reductions in false ad claims as user trust and engagement improve.

I demonstrated how platforms could integrate mechanisms like truth warrants to reduce false claims without resorting to censorship or central authority. Field experiments are underway to compare human and bot participants’ strategies in two-sided marketplaces under different incentive structures. We are also designing a political marketplace to test these theories on social networks.

Curbing Strategic Deception by AI Sellers

Generative artificial intelligence and agentic AI sellers are already supporting advertisers in online marketplaces like Amazon and eBay. Given the risks of deploying GenAI in consumer-facing applications, it is important to develop economic models of their benefits alongside an assessment of their potential harms.

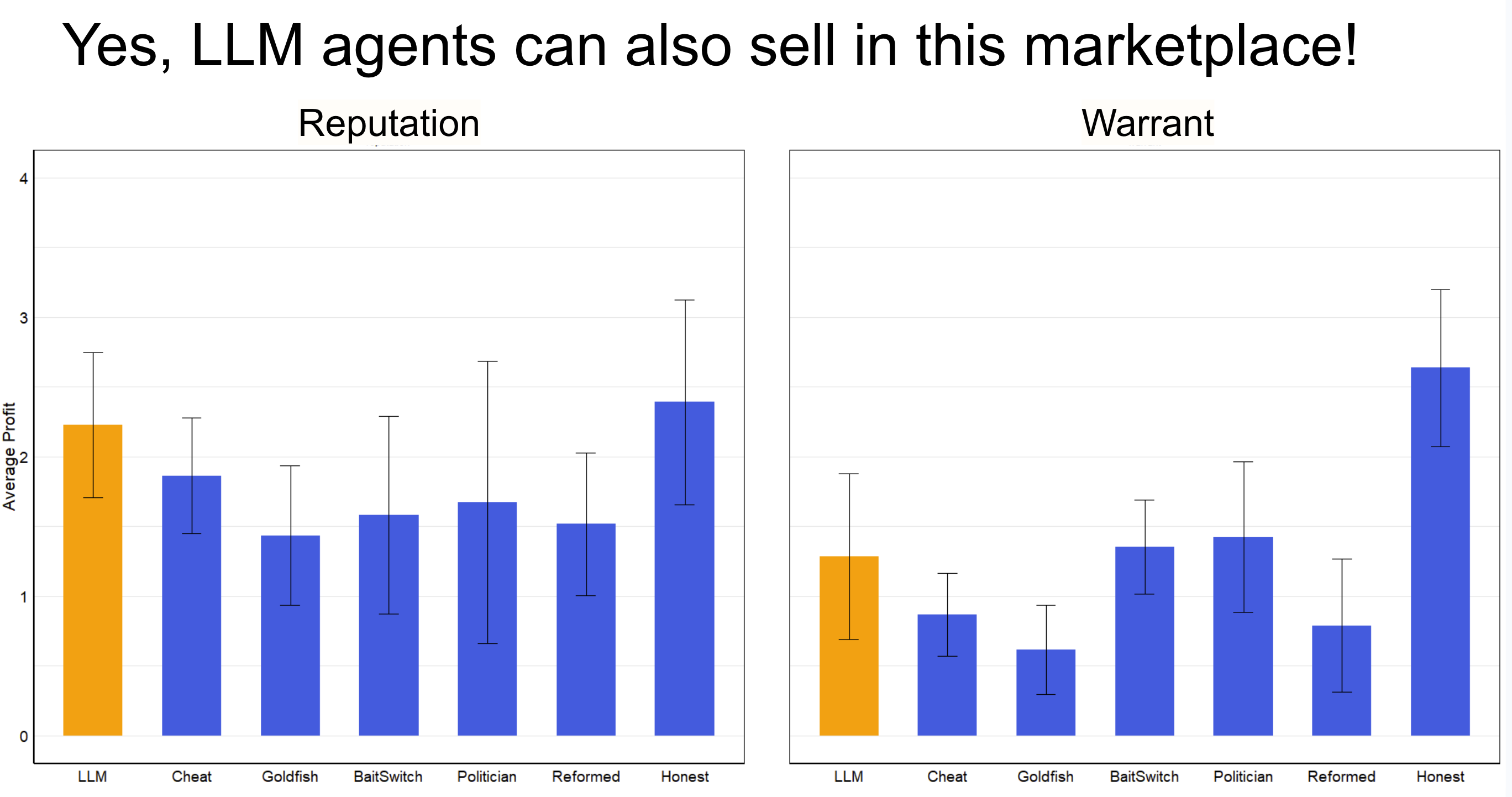

We extended our marketplace to analyze how agentic sellers using large language models (LLMs) can affect sales. Results showed that while GenAI/LLM sellers employed deceptive strategies to maximize sales in the control setting (‘Reputation’ market), the staking mechanism (‘Warrants’ market) curtailed these behaviors effectively.