Recommender Systems

Building multimodal content distribution algorithms and testing their robustness to adversarial actors

Adobe Research - Multimodal Recommender Systems using Graph Neural Networks

- Paper: Open-domain Trending Hashtag Recommendation for Videos, S. Mehta et al., IEEE International Symposium on Multimedia (2021)

- Patent: Under review, Adobe Research

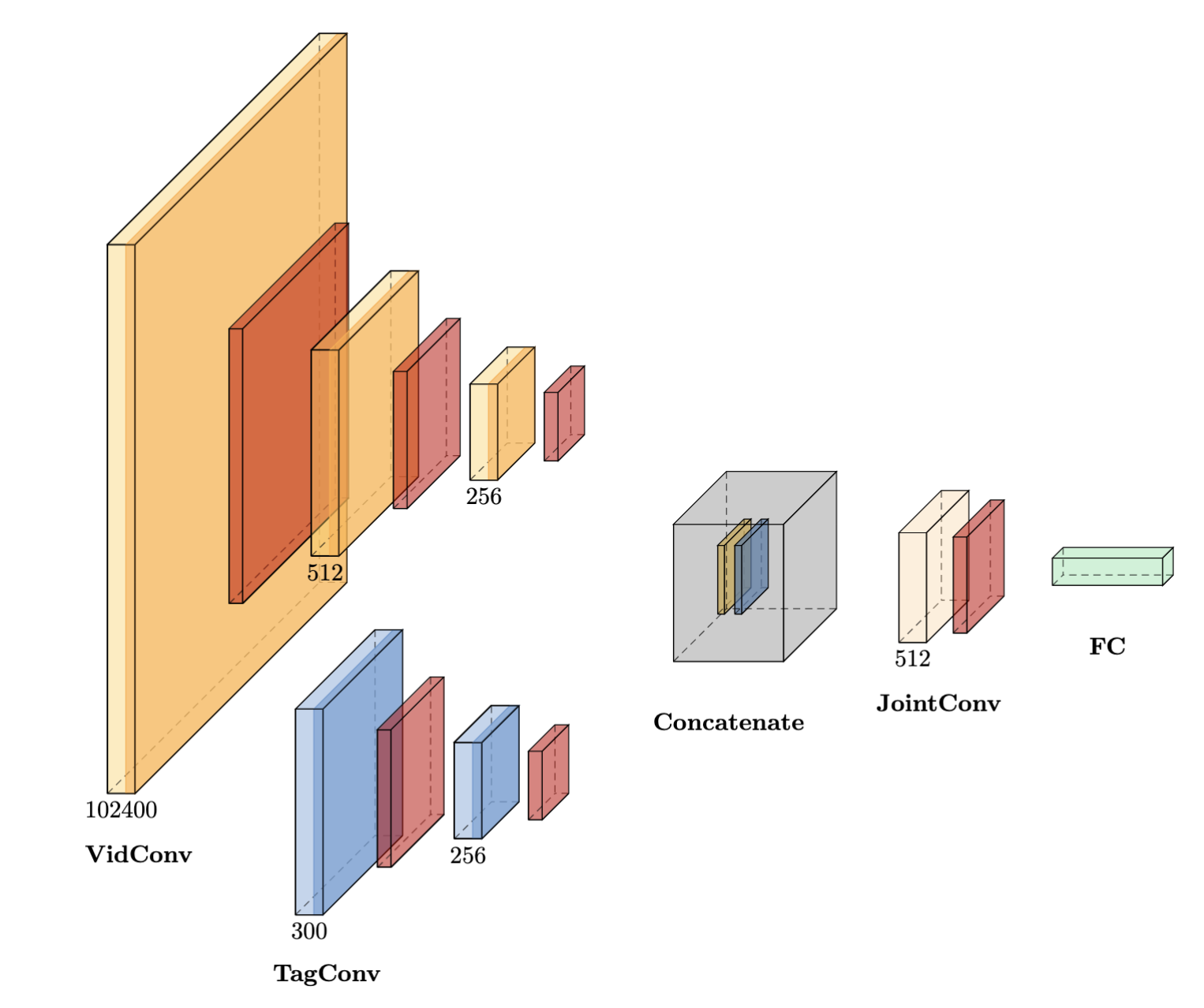

I built a production-ready, graph-based deep learning recommender system at Adobe Research that predicts trending hashtags for videos newly uploaded by creators. The system was deployed to production, and a patent has been filed for it.

Recommendations determine the type, ranking, and placement of most of the content that appears on our screens, from social networks to e-commerce sites and advertisements. A laser focus on personalization has led to a plethora of issues, from bias and lack of interpretability to filter bubbles and echo chambers. My work at Adobe tackled a zero-shot prediction problem: I built a production-ready system based on graph attention networks, together with a novel hashtag matching algorithm. In combination, the two matched trending hashtags with relevant videos to improve content discovery across all Adobe products. Part of our motivation was to develop a tool that addresses the content discovery problem, which poorly designed recommendations exacerbate.

Most importantly, we solved a zero-shot learning problem: incoming “trending” hashtags do not appear in our training data, yet we need to predict which videos they should be shared alongside. To do so, we built a video-hashtag graph as our knowledge base and defined an edge prediction task between video nodes and incoming hashtag nodes. The knowledge graph was continuously updated with new data, so the deployed graph neural network could handle novel trends without being retrained.
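To make the edge prediction setup concrete, here is a minimal sketch of the idea, not the deployed system. It assumes videos and hashtags already have feature vectors in a shared space, and it swaps in plain cosine similarity for the learned graph attention scorer. The class `VideoHashtagGraph`, its methods, and all feature values are hypothetical names and numbers chosen for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


class VideoHashtagGraph:
    """Toy bipartite knowledge base of video and hashtag nodes (hypothetical)."""

    def __init__(self):
        self.videos = {}    # video id -> feature vector
        self.hashtags = {}  # hashtag -> feature vector
        self.edges = set()  # known (video id, hashtag) pairs

    def add_video(self, video_id, features):
        self.videos[video_id] = features

    def add_hashtag(self, tag, features):
        # New trending hashtags are added as nodes; nothing is retrained.
        self.hashtags[tag] = features

    def add_edge(self, video_id, tag):
        self.edges.add((video_id, tag))

    def predict_edges(self, tag, k=2):
        """Rank videos for a hashtag; cosine similarity stands in for the learned scorer."""
        query = self.hashtags[tag]
        scored = [(cosine(query, feats), vid) for vid, feats in self.videos.items()]
        # Highest score first; ties are broken by video id for determinism.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [vid for _, vid in scored[:k]]


graph = VideoHashtagGraph()
graph.add_video("cooking_clip", [1.0, 0.0, 0.0])
graph.add_video("soccer_goal", [0.0, 1.0, 0.0])
graph.add_video("recipe_vlog", [0.9, 0.1, 0.0])
graph.add_hashtag("#dinner", [1.0, 0.0, 0.0])
graph.add_edge("cooking_clip", "#dinner")

# A hashtag that was never seen before joins the graph at serving time.
graph.add_hashtag("#worldcup", [0.1, 0.9, 0.0])
top_videos = graph.predict_edges("#worldcup", k=2)
print(top_videos)
```

The point of the sketch is the workflow: the unseen hashtag becomes a new node, and candidate edges to existing videos are scored without touching any trained parameters. In a real system the scorer would be a trained graph neural network rather than a fixed similarity function.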

Modeling the Amplification of Harms by Adversarial Gaming of Online Recommendations

To understand how modern ranking and recommendation algorithms like Reddit’s can be gamed, I recreated those algorithms and modeled how bad actors can manipulate each type to attack, take down, or spread harmful content on different subreddits. Our work (jointly with Oxford’s Torr Vision Group) was selected for an oral talk at the AI4ABM workshop at the International Conference on Machine Learning (ICML) in 2022. Read the Paper.
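As a rough illustration of why a ranking formula can be gamed, the sketch below reproduces the "hot" score as I recall it from Reddit's formerly open-sourced code. The constants and the exact form are assumptions to verify against that code, the formula running in production today may differ, and this is not the model from the paper. The vote counts and the timestamp `now` are made up for the example.

```python
import math

# Constants as recalled from Reddit's formerly open-sourced code (assumptions).
REDDIT_EPOCH = 1134028003  # reference timestamp, in Unix seconds
DECAY_SECONDS = 45000      # being 12.5 hours newer is worth one tenfold increase in net votes


def hot(ups, downs, posted_at):
    """Hot score: log-scaled net votes plus a bonus for newer posts."""
    net = ups - downs
    order = math.log10(max(abs(net), 1))
    sign = (net > 0) - (net < 0)
    return round(sign * order + (posted_at - REDDIT_EPOCH) / DECAY_SECONDS, 7)


now = 1_650_000_000  # arbitrary posting time for the example (hypothetical)

organic = hot(ups=10, downs=0, posted_at=now)
# Same post, same age, plus 90 coordinated fake upvotes.
boosted = hot(ups=100, downs=0, posted_at=now)
# An honest post with 10 upvotes must be 12.5 hours newer to tie the boosted one.
newer_organic = hot(ups=10, downs=0, posted_at=now + DECAY_SECONDS)
# Coordinated downvotes push a post below an empty post of the same age.
buried = hot(ups=10, downs=100, posted_at=now)
empty = hot(ups=0, downs=0, posted_at=now)

print(boosted - organic, newer_organic - boosted, buried < empty)
```

Because votes enter the score logarithmically, the first handful of votes moves a post far more than later ones. Under this formula, a small group acting early can buy a post many hours of visibility, or bury it, at very low cost.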

I am also using simplified recommendation systems in SimPPL: A Social Network Simulator with Probabilistic Programs to simulate content spread and shilling attacks (bad actors using fake reviews to boost virality), and to stimulate research on detecting and controlling misleading content online.